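I. OpenStack Deployment

1. Environment preparation

| Hostname | IP | Disks | CPU | Memory |
| --- | --- | --- | --- | --- |
| controller | NIC 1: 10.0.0.10, NIC 2: not configured | sda: 100G | 2C | 6G |
| compute01 | NIC 1: 10.0.0.11, NIC 2: not configured | sda: 100G, sdb: 20G | 2C | 4G |
| compute02 | NIC 1: 10.0.0.12, NIC 2: not configured | sda: 100G, sdb: 20G | 2C | 4G |

| Operating system | Virtualization tool |
| --- | --- |
| Ubuntu 22.04 | VMware 15 |

2. Configure the offline environment

# Extract the archive

3. Environment preparation

3.1 Configure the network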

cat > /etc/netplan/00-installer-config.yaml << EOF

cat > /etc/netplan/00-installer-config.yaml << EOF

cat > /etc/netplan/00-installer-config.yaml << EOF
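The heredoc bodies for the three nodes are not shown above. As a rough sketch of what each one might contain, the helper below prints a netplan document with a static address on the first NIC and an unconfigured second NIC. The helper name `render_netplan`, the interface name `ens33`, and the `/24` prefix are assumptions for illustration; only the addresses and `ens34` appear elsewhere in this guide, and gateway and DNS settings are left out because the original does not state them.

```shell
# Sketch only: print a netplan document for one node.
# ASSUMPTIONS: render_netplan is a hypothetical helper; the interface
# names and the /24 prefix length are illustrative, not from the original.
render_netplan() {
  local mgmt_if="$1" mgmt_addr="$2" provider_if="$3"
  cat <<EOF
network:
  version: 2
  ethernets:
    ${mgmt_if}:
      dhcp4: false
      addresses: [${mgmt_addr}/24]
    ${provider_if}:
      dhcp4: false
EOF
}

# Controller node: NIC 1 gets 10.0.0.10, NIC 2 stays unconfigured.
render_netplan ens33 10.0.0.10 ens34
```

Redirecting the output to `/etc/netplan/00-installer-config.yaml` and running `netplan apply` would activate it; check the real interface names with `ip link` first.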

3.2 Set hostnames and configure name resolution

hostnamectl set-hostname controller.mxq001

hostnamectl set-hostname compute01.mxq001

hostnamectl set-hostname compute02.mxq001

cat >> /etc/hosts << EOF
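The entries appended to `/etc/hosts` are not shown. A minimal sketch, built from the addresses in the environment table and the hostnames set above, could look like this. The `www.` aliases are an assumption, added because the service URLs later in this guide use names such as `www.controller.mxq001`; `render_hosts` is a hypothetical helper.

```shell
# Sketch only: print /etc/hosts entries for the three OpenStack nodes.
# ASSUMPTION: the www.<name>.mxq001 aliases are inferred from the URLs
# used later in this guide, not taken from the original heredoc.
render_hosts() {
  local domain="mxq001" entry ip name
  for entry in 10.0.0.10:controller 10.0.0.11:compute01 10.0.0.12:compute02; do
    ip="${entry%%:*}"
    name="${entry##*:}"
    printf '%s %s.%s www.%s.%s %s\n' "$ip" "$name" "$domain" "$name" "$domain" "$name"
  done
}

render_hosts
```

Appending the output with `render_hosts >> /etc/hosts` on every node keeps the three files identical.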

3.3 Time synchronization

# Enable the configurable service

# Install the service

# Install the service

3.4 Install the OpenStack client

apt install -y python3-openstackclient

3.5 Install and deploy MariaDB

apt install -y mariadb-server python3-pymysql

cat > /etc/mysql/mariadb.conf.d/99-openstack.cnf << EOF
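The body of `99-openstack.cnf` is not shown. The sketch below prints the settings that the upstream OpenStack install guide suggests for this file; whether the original used exactly these values is an assumption. `render_mariadb_cnf` is a hypothetical helper, and the bind address is the controller's management IP from the environment table.

```shell
# Sketch only: print a MariaDB drop-in for OpenStack.
# ASSUMPTION: values follow the upstream install guide, since the
# original heredoc body is not available.
render_mariadb_cnf() {
  local bind_address="$1"
  cat <<EOF
[mysqld]
bind-address = ${bind_address}
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
}

render_mariadb_cnf 10.0.0.10
```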

service mysql restart

mysql_secure_installation

3.6 Install and deploy RabbitMQ

apt install -y rabbitmq-server

Create the openstack user

Username: openstack

Password: 1qaz@WSX3edc

rabbitmqctl add_user openstack 1qaz@WSX3edc

Grant the openstack user configure, write, and read access

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

3.7 Install and deploy Memcached (used to cache tokens)

apt install -y memcached python3-memcache

vim /etc/memcached.conf

service memcached restart

4. Deploy and configure Keystone

Run on the controller node

Create a database and user for Keystone

# Create the database
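The SQL statements for this step are not shown, and the same pattern recurs for Glance, Placement, Nova, Neutron, and Cinder. A minimal sketch, assuming the usual pattern of one database plus a same-named user reachable from `localhost` and from any host: `service_db_sql` is a hypothetical helper and `KEYSTONE_DBPASS` is a placeholder password, not a value from the original.

```shell
# Sketch only: print the SQL that creates a service database and its user.
# ASSUMPTIONS: service_db_sql is a hypothetical helper; the password is a
# placeholder. GRANT ... IDENTIFIED BY is MariaDB syntax.
service_db_sql() {
  local db="$1" user="$2" pass="$3" host
  echo "CREATE DATABASE IF NOT EXISTS ${db};"
  for host in localhost %; do
    echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
  done
}

service_db_sql keystone keystone KEYSTONE_DBPASS
```

Piping the output into the client, for example `service_db_sql keystone keystone KEYSTONE_DBPASS | mysql`, applies it on the controller.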

apt install -y keystone

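# Back up the configuration file

su -s /bin/sh -c "keystone-manage db_sync" keystone

Specify the Keystone user and group

These options allow Keystone to run under a different operating system user/group

# User

Before the Queens release, Keystone had to run on two separate ports to accommodate the Identity v2 API, which usually ran a separate admin-only service on port 35357. With the v2 API removed, Keystone can run on the same port, 5000, for all interfaces.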

keystone-manage bootstrap --bootstrap-password 1qaz@WSX3edc --bootstrap-admin-url http://www.controller.mxq001:5000/v3/ --bootstrap-internal-url http://www.controller.mxq001:5000/v3/ --bootstrap-public-url http://www.controller.mxq001:5000/v3/ --bootstrap-region-id RegionOne

Edit /etc/apache2/apache2.conf and set the ServerName option to reference the controller node

echo "ServerName www.controller.mxq001" >> /etc/apache2/apache2.conf

service apache2 restart

cat > /etc/keystone/admin-openrc.sh << EOF
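The contents of `admin-openrc.sh` are not shown. A sketch based on the bootstrap command above (same password and URL) and the variable set used by the upstream install guide might look like the following; `render_admin_openrc` is a hypothetical helper, and the project and domain names are the upstream defaults rather than values confirmed by the original.

```shell
# Sketch only: print an admin credentials file.
# ASSUMPTION: project/domain names are the upstream defaults; password and
# auth URL are copied from the keystone-manage bootstrap command.
render_admin_openrc() {
  cat <<'EOF'
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD='1qaz@WSX3edc'
export OS_AUTH_URL=http://www.controller.mxq001:5000/v3/
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

render_admin_openrc
```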

source /etc/keystone/admin-openrc.sh

openstack project create --domain default --description "Service Project" service

openstack token issue

5. Deploy and configure the Glance image service

Run on the controller node

Create a database and user for Glance

# Create the database

openstack user create --domain default --password glance glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://www.controller.mxq001:9292
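Only the public endpoint is shown here and in the later service sections. The upstream install guide registers `public`, `internal`, and `admin` endpoints for each service, so the other two were probably lost from this copy; that is an assumption. The hypothetical helper below only prints the three commands so they can be reviewed before running.

```shell
# Sketch only: print the endpoint-create commands for all three interfaces.
# ASSUMPTION: internal and admin endpoints share the public URL, as in the
# keystone-manage bootstrap command earlier in this guide.
endpoint_commands() {
  local service_type="$1" url="$2" iface
  for iface in public internal admin; do
    printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
      "$service_type" "$iface" "$url"
  done
}

endpoint_commands image http://www.controller.mxq001:9292
```

The same helper would cover the later services by swapping the service type and URL, for example `endpoint_commands placement http://www.controller.mxq001:8778`.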

apt install -y glance

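# Back up the configuration file

su -s /bin/sh -c "glance-manage db_sync" glance

service glance-api restart

# Download the image

6. Deploy and configure the Placement service

Purpose: the Placement service tracks the inventory and usage of each resource provider. For example, an instance created on a compute node may consume CPU and memory from the compute node resource provider, disk from an external shared storage pool resource provider, and IP addresses from an external IP pool resource provider.

Create a database and user for Placement

# Create the database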

openstack user create --domain default --password placement placement

Add the placement user to the service project with the admin role

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://www.controller.mxq001:8778

apt install -y placement-api

# Back up the configuration file

su -s /bin/sh -c "placement-manage db sync" placement

service apache2 restart

root@controller:~# placement-status upgrade check

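7. Deploy and configure the Nova compute service

7.1 Controller node configuration

# Databases that store Nova's interaction data and related state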

openstack user create --domain default --password nova nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://www.controller.mxq001:8774/v2.1

apt install -y nova-api nova-conductor nova-novncproxy nova-scheduler

# Back up the configuration file

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

# Handle the API service

7.2 Compute node configuration

compute01 node

Install the nova-compute service

apt install -y nova-compute

# Back up the configuration file

# Determine whether the compute node supports hardware acceleration for virtual machines
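The check itself is not shown. The usual test counts the `vmx` (Intel) or `svm` (AMD) CPU flags in `/proc/cpuinfo`: a non-zero count means KVM can be used, while zero means falling back to plain QEMU emulation, which is common inside a VMware guest without nested virtualization. `suggest_virt_type` is a hypothetical helper that turns that count into a suggested `virt_type` value.

```shell
# Sketch only: suggest a libvirt virt_type from the CPU flags.
# ASSUMPTION: suggest_virt_type is a hypothetical helper; the optional
# argument exists so the logic can be tried against a sample file.
suggest_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}" count
  # grep -c exits non-zero when nothing matches, so ignore its status.
  count="$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)"
  if [ "${count:-0}" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
}

suggest_virt_type
```

If the result is `qemu`, setting `virt_type = qemu` in the `[libvirt]` section of the compute node's Nova configuration is the usual follow-up.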

service nova-compute restart

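compute02 node

Install the nova-compute service

apt install -y nova-compute

# Back up the configuration file

# Determine whether the compute node supports hardware acceleration for virtual machines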

service nova-compute restart

7.3 Configure host discovery

openstack compute service list --service nova-compute

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

vim /etc/nova/nova.conf

service nova-api restart

root@controller:~# openstack compute service list

8. Configure the OVS-based Neutron networking service

8.1 Controller node configuration

# Create the database

openstack user create --domain default --password neutron neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://www.controller.mxq001:9696

cat >> /etc/sysctl.conf << EOF
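The kernel parameters appended here are not shown. Since the next command loads `br_netfilter`, the likely content is the pair of bridge filtering switches from the upstream Neutron guide; treat that as an assumption. `render_bridge_sysctl` is a hypothetical helper that prints them.

```shell
# Sketch only: print the bridge-filter sysctl settings.
# ASSUMPTION: these two keys follow the upstream Neutron install guide;
# the original heredoc body is not available.
render_bridge_sysctl() {
  cat <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}

render_bridge_sysctl
```

These keys only exist once `br_netfilter` is loaded, which is why `modprobe br_netfilter` has to run before `sysctl -p`.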

modprobe br_netfilter

sysctl -p

apt install -y neutron-server neutron-plugin-ml2 neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-openvswitch-agent

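# Back up the configuration file

# Back up the configuration file

Configure the openvswitch_agent.ini file

# Back up the file

# Back up the file

# Back up the file

Configure the metadata_agent.ini file

Provides the metadata service

What is metadata?

Metadata supports functions such as indicating storage locations, recording history, locating resources, and keeping file records. It works like an electronic catalog: to build the catalog, the content or characteristics of the data must be described and stored, which in turn helps with data retrieval.

# Back up the file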

vim /etc/nova/nova.conf

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

service nova-api restart

ovs-vsctl add-br br-ens34

ovs-vsctl add-port br-ens34 ens34

# Provide the Neutron service

8.2 Compute node configuration

cat >> /etc/sysctl.conf << EOF

modprobe br_netfilter

sysctl -p

apt install -y neutron-openvswitch-agent

# Back up the file

Configure the openvswitch_agent.ini file

# Back up the file

vim /etc/nova/nova.conf

service nova-compute restart

ovs-vsctl add-br br-ens34

ovs-vsctl add-port br-ens34 ens34

service neutron-openvswitch-agent restart

cat >> /etc/sysctl.conf << EOF

modprobe br_netfilter

sysctl -p

apt install -y neutron-openvswitch-agent

# Back up the file

Configure the openvswitch_agent.ini file

# Back up the file

vim /etc/nova/nova.conf

service nova-compute restart

ovs-vsctl add-br br-ens38

ovs-vsctl add-port br-ens38 ens38

service neutron-openvswitch-agent restart

8.3 校验neutron

root@controller:~# openstack network agent list

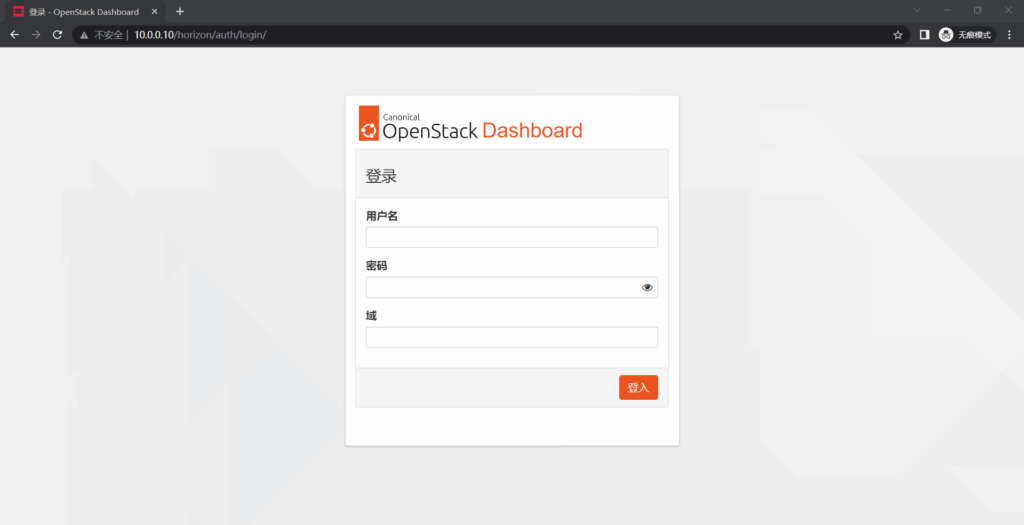

9. 配置dashboard仪表盘服务

apt install -y openstack-dashboard

vim /etc/openstack-dashboard/local_settings.py

systemctl reload apache2

10. Deploy and configure Cinder volume storage

10.1 Controller node configuration

# Create the cinder database

openstack user create --domain default --password cinder cinder

openstack role add --project service --user cinder admin

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

openstack endpoint create --region RegionOne volumev3 public http://www.controller.mxq001:8776/v3/%\(project_id\)s

apt install -y cinder-api cinder-scheduler

# Back up the file

su -s /bin/sh -c "cinder-manage db sync" cinder

vim /etc/nova/nova.conf

service nova-api restart

service cinder-scheduler restart

service apache2 reload

10.2 Compute node configuration

apt install -y lvm2 thin-provisioning-tools

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

Edit the lvm.conf file

Purpose: add a filter that accepts the /dev/sdb device and rejects all other devices

vim /etc/lvm/lvm.conf
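The filter line itself is not shown. In the upstream Cinder guide it goes inside the `devices { ... }` section of `lvm.conf` and has the form printed below. `lvm_filter_line` is a hypothetical helper; it takes a list of device names because a system disk that also uses LVM (possible with a default Ubuntu install on `sda`) would need its own accept entry, which is an assumption to verify with `pvs`.

```shell
# Sketch only: print an LVM filter that accepts the given devices and
# rejects everything else.
# ASSUMPTION: lvm_filter_line is a hypothetical helper, not part of LVM.
lvm_filter_line() {
  local dev parts=""
  for dev in "$@"; do
    parts="${parts}\"a/${dev}/\", "
  done
  printf 'filter = [ %s"r/.*/" ]\n' "$parts"
}

lvm_filter_line sdb
```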

apt install -y cinder-volume tgt

# Back up the configuration file

vim /etc/tgt/conf.d/tgt.conf

service tgt restart

apt install -y lvm2 thin-provisioning-tools

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

Edit the lvm.conf file

Purpose: add a filter that accepts the /dev/sdb device and rejects all other devices

vim /etc/lvm/lvm.conf

apt install -y cinder-volume tgt

# Back up the configuration file

vim /etc/tgt/conf.d/tgt.conf

service tgt restart

10.3 Verify Cinder

root@controller:~# openstack volume service list

11. Operations in practice

11.1 Load the OpenStack environment variables

source /etc/keystone/admin-openrc.sh

11.2 Create a router

openstack router create Ext-Router

11.3 Create a VXLAN network

openstack network create --provider-network-type vxlan Intnal

openstack subnet create Intsubnal --network Intnal --subnet-range 166.66.66.0/24 --gateway 166.66.66.1 --dns-nameserver 114.114.114.114

11.4 Attach the internal network to the router

openstack router add subnet Ext-Router Intsubnal

11.5 Create a flat network

openstack network create --provider-physical-network physnet1 --provider-network-type flat --external Extnal

openstack subnet create Extsubnal --network Extnal --subnet-range 192.168.3.0/24 --allocation-pool start=192.168.3.100,end=192.168.3.200 --gateway 192.168.3.1 --dns-nameserver 114.114.114.114 --no-dhcp

11.6 Set the router gateway interface

openstack router set Ext-Router --external-gateway Extnal

11.7 Open up the security group

# Allow the ICMP protocol
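The rule commands are not shown. A sketch of what they might be: one ingress rule for ICMP, as the comment says, plus one for SSH on TCP port 22. The SSH rule and the helper name `security_group_rule_commands` are assumptions, not taken from the original. The helper only prints the commands for review.

```shell
# Sketch only: print security group rule commands for ping and SSH.
# ASSUMPTIONS: the SSH rule is an addition for illustration; the group
# name defaults to "default".
security_group_rule_commands() {
  local group="${1:-default}"
  echo "openstack security group rule create --protocol icmp --ingress ${group}"
  echo "openstack security group rule create --protocol tcp --dst-port 22 --ingress ${group}"
}

security_group_rule_commands
```

When logged in as admin, several projects may each own a group called `default`; passing the group ID from `openstack security group list` instead of the name avoids ambiguity.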

11.8 Upload an image

openstack image create cirros04 --disk-format qcow2 --file cirros-0.4.0-x86_64-disk.img

11.9 Create an instance

ssh-keygen -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

openstack flavor create --vcpus 1 --ram 512 --disk 1 C1-512MB-1G

openstack server create --flavor C1-512MB-1G --image cirros --security-group default --nic net-id=4a9567c0-f2bb-4b11-a9db-5a33eb7d3192 --key-name mykey vm01

openstack floating ip create Extnal

openstack server add floating ip vm01 192.168.3.118

openstack console url show vm01

11.10 Create a volume type

openstack volume type create lvm

11.11 Add metadata to the volume type

cinder --os-username admin --os-tenant-name admin type-key lvm set volume_backend_name=lvm

11.12 List volume types

openstack volume type list

11.13 Create a volume

openstack volume create lvm01 --type lvm --size 1

11.14 Attach the volume to the instance

nova volume-attach vm01 2ebc30ed-7380-4ffa-a2fc-33beb32a8592

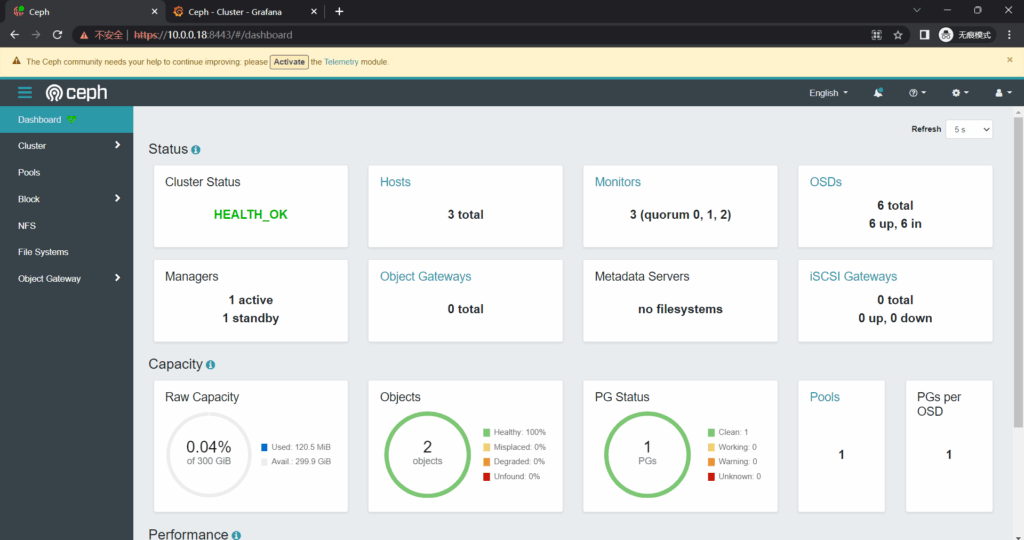

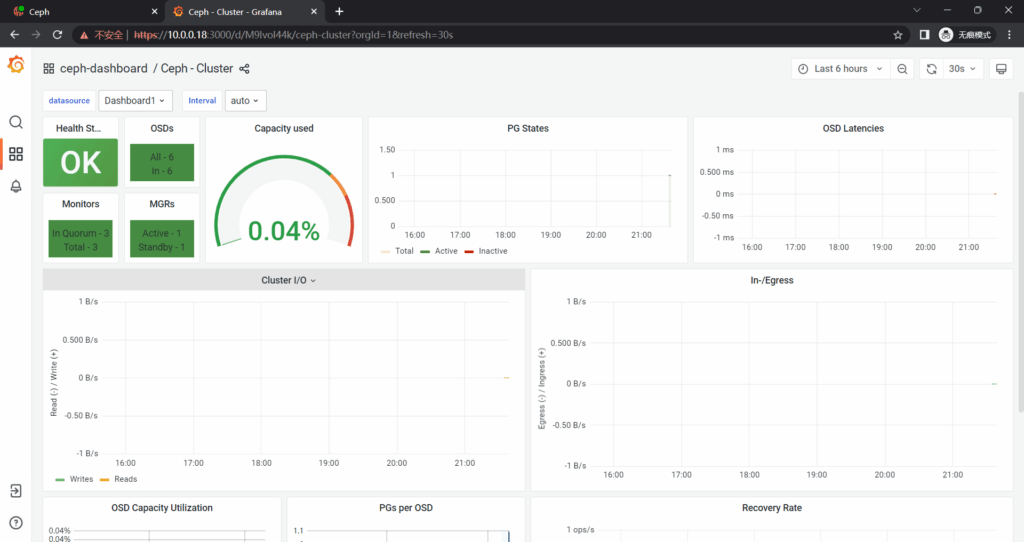

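II. Ceph Cluster Deployment

1. Environment preparation

| Hostname | IP | Disks | CPU | Memory |
| --- | --- | --- | --- | --- |
| node1 | 10.0.0.18 | sda: 100G, sdb: 50G, sdc: 50G | 2C | 4G |
| node2 | 10.0.0.19 | sda: 100G, sdb: 50G, sdc: 50G | 2C | 4G |
| node3 | 10.0.0.20 | sda: 100G, sdb: 50G, sdc: 50G | 2C | 4G |

| Operating system | Virtualization tool |
| --- | --- |
| Ubuntu 22.04 | VMware 15 |

1.1 Configure addresses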

cat > /etc/netplan/00-installer-config.yaml << EOF

cat > /etc/netplan/00-installer-config.yaml << EOF

cat > /etc/netplan/00-installer-config.yaml << EOF

1.2 Change hostnames

hostnamectl set-hostname storage01.mxq001

hostnamectl set-hostname storage02.mxq001

hostnamectl set-hostname storage03.mxq001

2. Configure hosts resolution (all nodes)

cat >> /etc/hosts <<EOF

3. Build the offline repository (all nodes)

tar zxvf ceph_quincy.tar.gz -C /opt/

4. Configure time synchronization

# Enable the configurable service

# Install the service

# Install the service

5. Install Docker (all nodes)

apt -y install docker-ce

6. Install cephadm (node1)

apt install -y cephadm

7. Import the Ceph image (all nodes)

docker load -i cephadm_images_v17.tar

7.1 Set up a local registry (node1)

# Import the image

cat >> /etc/docker/daemon.json << EOF

docker tag 0912465dcea5 www.storage01.mxq001:5000/ceph:v17

docker push www.storage01.mxq001:5000/ceph:v17

7.2 Configure the private registry

cat >> /etc/docker/daemon.json << EOF

8. Bootstrap the cluster (node1)

mkdir -p /etc/ceph

9. Install the ceph-common tools (node1)

apt install -y ceph-common

10. Add hosts to the cluster (node1)

ssh-copy-id -f -i /etc/ceph/ceph.pub storage02

ceph orch host add storage02

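11. Deploy OSDs

# List available disk devices

12. Open the dashboard to check the status

III. Integrating OpenStack with Ceph

1. Create the storage pools required by the backends

1.1 Storage pool for Cinder volumes

ceph osd pool create volumes 32

1.2 Glance storage pool

ceph osd pool create images 16

1.3 Backup storage pool

ceph osd pool create backups 16

1.4 Instance storage pool

ceph osd pool create vms 16

2. Create backend users

2.1 Create keys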

cd /etc/ceph/

On Ceph, create users and keys for cinder, glance, cinder-backup, and nova so that they can access the Ceph storage pools

2.1.1 Create the client.cinder user

It has rwx permission on the volumes pool, rwx permission on the vms pool, and rx permission on the images pool

ceph auth get-or-create client.cinder mon "allow r" osd "allow class-read object_prefix rbd_children,allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images"

2.1.2 Create the client.glance user

ceph auth get-or-create client.glance mon "allow r" osd "allow class-read object_prefix rbd_children,allow rwx pool=images"

2.1.3 Create the client.cinder-backup user

ceph auth get-or-create client.cinder-backup mon "profile rbd" osd "profile rbd pool=backups"

2.2 Create the key directory

mkdir /etc/ceph/

mkdir /etc/ceph/

mkdir /etc/ceph/

2.3 Export the keys

ceph auth get client.glance -o ceph.client.glance.keyring

ceph auth get client.cinder -o ceph.client.cinder.keyring

ceph auth get client.cinder-backup -o ceph.client.cinder-backup.keyring

2.4 Copy the keys

2.4.1 Controller node

scp ceph.client.glance.keyring root@www.controller.mxq001:/etc/ceph/

scp ceph.client.cinder.keyring root@www.controller.mxq001:/etc/ceph/

scp ceph.conf root@www.controller.mxq001:/etc/ceph/

2.4.2 Compute nodes

scp ceph.client.cinder.keyring root@www.compute01.mxq001:/etc/ceph/

Copy the cinder-backup key (backup service node)

scp ceph.client.cinder-backup.keyring root@www.compute01.mxq001:/etc/ceph/

scp ceph.conf root@www.compute01.mxq001:/etc/ceph/

3. Add the libvirt secret on the compute nodes

3.1 Add the secret on compute01

Generate the secret (note: if there are multiple compute nodes, they must all use the same UUID)

cd /etc/ceph/
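The `secret.xml` file used by the next command is not shown. A minimal sketch following the layout in the Ceph documentation for libvirt integration: `render_libvirt_secret` is a hypothetical helper, and the UUID in the example call is a made-up sample value. Generate one with `uuidgen` and reuse it on every compute node.

```shell
# Sketch only: print a libvirt secret definition for the client.cinder key.
# ASSUMPTION: render_libvirt_secret is a hypothetical helper; the UUID
# passed below is a sample value, not the one used in the original.
render_libvirt_secret() {
  local uuid="$1"
  cat <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${uuid}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

render_libvirt_secret 11111111-2222-3333-4444-555555555555
```

After redirecting the output to `secret.xml` and running `virsh secret-define`, the key itself is usually attached with `virsh secret-set-value --secret <uuid> --base64 <key>`, where the key comes from `ceph auth get-key client.cinder` on a Ceph node.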

[root@compute01 ~]# virsh secret-define --file secret.xml

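# Copy the key value

virsh secret-list

3.2 Add the secret on compute02

Generate the secret (note: if there are multiple compute nodes, they must all use the same UUID)

cd /etc/ceph/

[root@compute02 ~]# virsh secret-define --file secret.xml

# Copy the key value

virsh secret-list

4. Install the Ceph client

Its main purpose is to let OpenStack use Ceph resources

controller node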

apt install -y ceph-common

apt install -y ceph-common

apt install -y ceph-common

5. Configure the Glance storage backend

chown glance:glance /etc/ceph/ceph.client.glance.keyring

vim /etc/glance/glance-api.conf
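The edits made to `glance-api.conf` are not shown. Based on the Ceph documentation for OpenStack integration, the RBD store section would plausibly look like the output below, using the `images` pool and `glance` user created earlier. `render_glance_rbd` is a hypothetical helper, and the chunk size is the documented example value rather than something stated in the original.

```shell
# Sketch only: print the glance_store section for an RBD backend.
# ASSUMPTION: option values follow the Ceph integration docs; the pool and
# user names match the ones created earlier in this guide.
render_glance_rbd() {
  cat <<'EOF'
[glance_store]
stores = rbd
default_store = rbd
rbd_store_pool = images
rbd_store_user = glance
rbd_store_ceph_conf = /etc/ceph/ceph.conf
rbd_store_chunk_size = 8
EOF
}

render_glance_rbd
```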

apt install -y python3-boto3

service glance-api restart

openstack image create cirros04_v1 --disk-format qcow2 --file cirros-0.4.0-x86_64-disk.img

rbd ls images

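6. Configure the Cinder storage backend

Change the ownership of the cinder keyring (controller, compute01, and compute02 nodes)

chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

vim /etc/cinder/cinder.conf

Edit the configuration file (compute01 and compute02 storage nodes)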

vim /etc/cinder/cinder.conf
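The `cinder.conf` changes are not shown. A sketch of an RBD backend section, following the Ceph integration documentation and matching the `volume_backend_name=ceph` set on the volume type below: `render_cinder_rbd` is a hypothetical helper, and its argument must be the same UUID used for the libvirt secret.

```shell
# Sketch only: print a Cinder RBD backend configuration.
# ASSUMPTIONS: render_cinder_rbd is a hypothetical helper; the backend
# section name "ceph" is chosen to match the volume type in this guide.
render_cinder_rbd() {
  local secret_uuid="$1"
  cat <<EOF
[DEFAULT]
enabled_backends = ceph

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = ceph
rbd_pool = volumes
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = cinder
rbd_secret_uuid = ${secret_uuid}
EOF
}

render_cinder_rbd 11111111-2222-3333-4444-555555555555
```

If the LVM backend from section 10 should stay available, `enabled_backends` would list both backends, separated by a comma.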

openstack volume type create ceph

cinder --os-username admin --os-tenant-name admin type-key ceph set volume_backend_name=ceph

openstack volume type list

openstack volume create ceph01 --type ceph --size 1

rbd ls volumes

7. Configure volume backup

compute01 and compute02 nodes

Install the service

apt install cinder-backup -y

chown cinder:cinder /etc/ceph/ceph.client.cinder-backup.keyring

vim /etc/cinder/cinder.conf

service cinder-backup restart

openstack volume backup create --name ceph_backup ceph01

rbd ls backups

8. Integrate Nova with Ceph

compute01 and compute02 nodes

Edit the configuration file

vim /etc/nova/nova.conf
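The `nova.conf` changes are not shown. To place instance disks in the `vms` pool, the Ceph integration documentation uses a `[libvirt]` section along these lines. `render_nova_rbd` is a hypothetical helper; the `cinder` user is used because the `client.cinder` key created earlier already has rwx access to `vms`, and the UUID must match the libvirt secret.

```shell
# Sketch only: print the libvirt section that stores instance disks in RBD.
# ASSUMPTION: render_nova_rbd is a hypothetical helper; values follow the
# Ceph integration docs and the pools created earlier in this guide.
render_nova_rbd() {
  local secret_uuid="$1"
  cat <<EOF
[libvirt]
images_type = rbd
images_rbd_pool = vms
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = cinder
rbd_secret_uuid = ${secret_uuid}
EOF
}

render_nova_rbd 11111111-2222-3333-4444-555555555555
```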

apt install -y qemu-block-extra

service nova-compute restart

openstack server create --flavor C1-512MB-1G --image cirros04_v1 --security-group default --nic net-id=$(openstack network show Intnal -f value -c id) --key-name mykey vm02

rbd ls vms

8.1 Live migration configuration

compute01 and compute02 nodes

Configure the listen address

vim /etc/libvirt/libvirtd.conf

vim /etc/default/libvirtd

systemctl mask libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket libvirtd-tls.socket libvirtd-tcp.socket

service libvirtd restart

service nova-compute restart

Test that the nodes can connect to each other

Connect from compute01 to compute02

virsh -c qemu+tcp://www.compute02.mxq001/system

virsh -c qemu+tcp://www.compute01.mxq001/system

openstack server list

openstack server show 1f6dd9b8-7700-43a7-bd1f-0695e0de4a04

nova live-migration 1f6dd9b8-7700-43a7-bd1f-0695e0de4a04 www.compute02.mxq001
